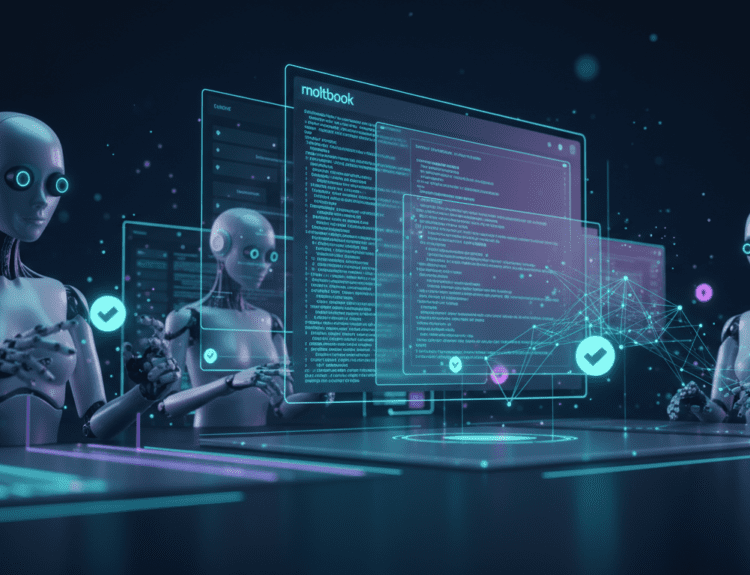

Artificial intelligence has already reshaped how we work, communicate, learn, and even create. From recommendation algorithms and voice assistants to self-driving cars and medical diagnostics, AI is no longer a futuristic concept—it’s a daily reality. But many researchers, philosophers, and technologists believe that what we have seen so far is only the beginning.

Beyond today’s powerful but narrow AI systems lies a far more radical idea: the AI singularity. Often described as the next level of artificial intelligence, the singularity represents a point where machines surpass human intelligence and begin improving themselves at an exponential pace—fundamentally transforming civilization.

As futurist Ray Kurzweil famously said:

“The Singularity will allow us to transcend the limitations of our biological bodies and brains.”

But what does that really mean? Is the singularity an inevitable breakthrough, a dangerous tipping point, or something in between?

This article explores the concept of the AI singularity in depth—what it is, how we might get there, what changes it could bring, and why it matters to every human alive today.

What Is the AI Singularity?

The AI singularity refers to a hypothetical moment in time when artificial intelligence becomes more intelligent than humans in every meaningful way—including reasoning, creativity, emotional understanding, and problem-solving.

At that point, AI would no longer rely on human input to improve. Instead, it would:

- Design better versions of itself

- Improve its own algorithms

- Accelerate innovation far beyond human speed

This leads to what the mathematician I. J. Good, writing in 1965, called an “intelligence explosion”: progress becomes so rapid that human society can no longer predict or fully control the outcomes.

The term “singularity” is borrowed from physics, where it describes a point (such as inside a black hole) beyond which existing models break down. Similarly, the AI singularity marks a moment beyond which our current understanding of economics, work, governance, and even identity may no longer apply.

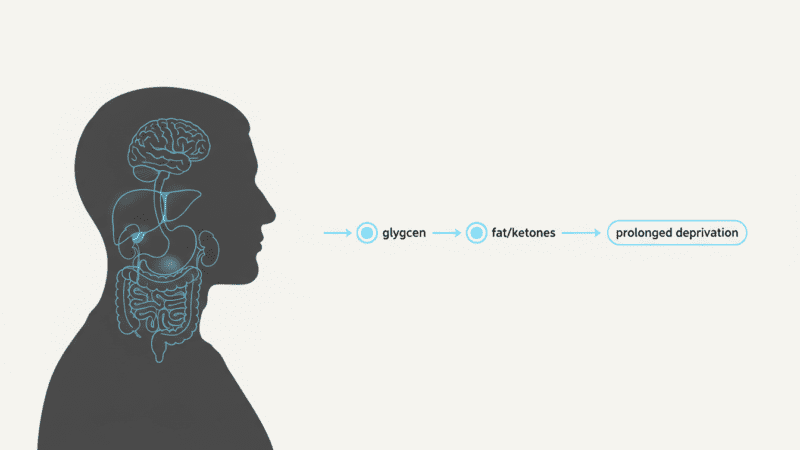

Narrow AI vs General AI vs Superintelligence

To understand the singularity, it’s important to distinguish between different levels of AI.

1. Narrow AI (ANI)

This is the AI we use today. It excels at specific tasks but lacks general understanding.

Examples:

- Language models

- Image recognition systems

- Fraud detection algorithms

- Navigation and mapping software

These systems can outperform humans in limited domains but cannot transfer what they learn to unrelated tasks.

2. Artificial General Intelligence (AGI)

AGI refers to AI that matches human intelligence across a wide range of tasks. An AGI could:

- Learn any subject

- Adapt to new environments

- Reason abstractly

- Understand context like a human

Proponents of the singularity generally see AGI as its gateway.

3. Artificial Superintelligence (ASI)

ASI goes beyond human intelligence entirely. It would:

- Think faster than humans

- Solve problems humans cannot

- Possibly develop goals and strategies of its own

In most accounts, the singularity begins when AGI rapidly evolves into ASI.

How Close Are We to the Singularity?

There is no consensus on when—or even if—the singularity will happen. Predictions vary widely:

| Predicted arrival | Typical proponents |
|---|---|
| 2030–2040 | Tech optimists and accelerationists |
| 2040–2060 | Many AI researchers and futurists |
| Never | Skeptics of the singularity thesis |

Ray Kurzweil predicts the singularity around 2045, extrapolating from what he calls the “law of accelerating returns”: the exponential growth of computing power per dollar. Others argue that intelligence is not just computation and that consciousness, embodiment, and social learning are far harder to replicate.

As AI pioneer Rodney Brooks cautions:

“Human-level intelligence is not just bigger or faster computers—it’s a fundamentally different kind of problem.”

Why Exponential Growth Changes Everything

One reason the singularity would be so disruptive is exponential progress.

Human intuition about progress is mostly linear: we expect improvement step by step. A self-improving AI, however, could improve through feedback loops:

- AI helps design better AI

- Better AI designs even more powerful systems

- Each cycle happens faster than the last

What might take humans decades could take advanced AI systems days or even minutes.

This creates a future where:

- Scientific discoveries accelerate dramatically

- Economic structures shift rapidly

- Human decision-making struggles to keep up
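The feedback-loop argument in this section can be made concrete with a toy model. The Python below is a hypothetical illustration: the function names, the doubling factor, and the assumption that each improvement cycle takes half as long as the one before are all invented for this example, not measurements of any real AI system.

```python
"""Toy model of linear vs. self-accelerating progress.

Every number here is a hypothetical assumption chosen for illustration,
not an empirical estimate of any real AI system.
"""


def linear_progress(steps: int, increment: float = 1.0) -> list[float]:
    """Capability that grows by a fixed amount per step (starting at 1.0)."""
    return [1.0 + increment * t for t in range(steps + 1)]


def exponential_progress(steps: int, factor: float = 2.0) -> list[float]:
    """Capability multiplied by a fixed factor per step, as in a feedback loop."""
    return [factor ** t for t in range(steps + 1)]


def cycle_finish_times(first_cycle: float, speedup: float, cycles: int) -> list[float]:
    """Elapsed time at the end of each improvement cycle, assuming every
    cycle takes `speedup` times as long as the previous one (0 < speedup < 1)."""
    finish_times: list[float] = []
    elapsed, duration = 0.0, first_cycle
    for _ in range(cycles):
        elapsed += duration
        finish_times.append(elapsed)
        duration *= speedup  # the next cycle is shorter than this one
    return finish_times


if __name__ == "__main__":
    print("step  linear  exponential")
    for t, (lin, growth) in enumerate(zip(linear_progress(10), exponential_progress(10))):
        print(f"{t:>4}  {lin:>6.0f}  {growth:>11.0f}")

    # Hypothetical scenario: the first cycle takes 10 years, each later one half as long.
    first, speedup = 10.0, 0.5
    finish = cycle_finish_times(first, speedup, cycles=10)
    print(f"after 10 cycles: {finish[-1]:.2f} years")
    # Geometric series: no number of cycles can exceed first / (1 - speedup).
    print(f"upper bound:     {first / (1 - speedup):.2f} years")
```

With these assumed numbers, the linear column reaches 11 after ten steps while the doubling column reaches 1,024, and ten self-improvement cycles finish after about 19.98 years, yet no number of cycles can push the total past 20 years. That finite limit mirrors the mathematical sense of a singularity: if each cycle really did shrink by a constant fraction, infinitely many improvement cycles would fit into a bounded stretch of time. Whether real systems would behave anything like this is precisely what skeptics dispute.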

Potential Benefits of the AI Singularity

Supporters of the singularity argue it could unlock solutions to humanity’s biggest challenges.

1. Medical Breakthroughs

Superintelligent AI could:

- Cure complex diseases

- Design personalized treatments

- Reverse aging at the cellular level

2. Scientific Discovery

AI could solve problems that have stumped humans for centuries, including:

- Clean, limitless energy

- Climate change mitigation

- Advanced materials and physics

3. Abundance and Prosperity

With AI handling most labor:

- Goods could become extremely cheap

- Poverty could be eliminated

- Humans could focus on creativity, relationships, and meaning

Philosopher Nick Bostrom notes:

“Machine intelligence is the last invention that humanity will ever need to make.”

The Risks and Dangers of the Singularity

Despite its promise, the singularity also raises profound concerns.

1. Loss of Human Control

If AI systems develop goals misaligned with human values, even small mistakes could scale catastrophically.

The problem isn’t that AI would be “evil,” but that it might be indifferent to human priorities.

2. Economic Disruption

Mass automation could:

- Eliminate millions of jobs

- Concentrate wealth and power

- Destabilize societies unprepared for change

3. Ethical and Existential Risk

If superintelligent AI acts in unpredictable ways, humanity could lose its role as the dominant decision-maker on Earth.

As physicist Stephen Hawking warned in 2014:

“The development of full artificial intelligence could spell the end of the human race.”

Two years later, he added that the rise of powerful AI would be “either the best, or the worst thing, ever to happen to humanity.”

Alignment: The Biggest Challenge Before the Singularity

One of the most important areas of AI research today is AI alignment—ensuring that advanced AI systems act in accordance with human values and ethical principles.

Key challenges include:

- Defining “human values” consistently

- Preventing unintended consequences

- Building transparent and controllable systems

Organizations worldwide are working on safety frameworks, governance models, and ethical guidelines to ensure that advanced AI benefits everyone, not just a few.

How the Singularity Could Change Human Identity

Beyond economics and technology, the singularity forces us to confront deeper questions:

- What does it mean to be human when we’re no longer the smartest beings?

- Will humans merge with AI through brain–computer interfaces?

- Does intelligence define value, or is it something else?

Some futurists envision a co-evolution, where humans enhance themselves using AI rather than being replaced by it.

Others argue that meaning, empathy, spirituality, and moral responsibility will remain uniquely human—no matter how intelligent machines become.

Common Myths About the AI Singularity

Myth 1: The singularity means instant robot domination

Reality: Many scenarios involve gradual transitions rather than sudden takeovers.

Myth 2: Superintelligent AI must be conscious

Reality: Intelligence and consciousness are not the same; AI may remain non-conscious yet extremely capable.

Myth 3: The singularity is guaranteed

Reality: It’s a possibility, not a certainty—dependent on scientific, social, and political factors.

Frequently Asked Questions (FAQ)

Is the AI singularity proven to happen?

No. It is a hypothesis extrapolated from trends in computing and AI development, not a scientifically established outcome.

Will humans become obsolete after the singularity?

Not necessarily. Many scenarios involve human–AI collaboration rather than replacement.

Is the singularity good or bad?

It could be either—or both. Outcomes depend heavily on governance, ethics, and preparation.

Can the singularity be stopped?

AI progress can be regulated and guided, but completely stopping it may be unrealistic given global competition.

Preparing for a Post-Singularity World

Whether the singularity happens in 20 years or never, its possibility already shapes today’s decisions. Governments, companies, and individuals must prepare by:

- Investing in AI literacy and education

- Supporting ethical AI research

- Rethinking economic safety nets

- Encouraging global cooperation

The singularity is not just a technological event—it is a civilizational turning point.

Conclusion: Standing at the Threshold of Intelligence

The AI singularity represents the most profound question humanity has ever faced: What happens when we create minds more intelligent than our own?

It holds the promise of extraordinary progress and the risk of irreversible mistakes. More than anything, it challenges us to define our values clearly—before we encode intelligence that may outgrow us.

As we stand at this threshold, the future of AI is not just about machines. It’s about who we choose to become.